
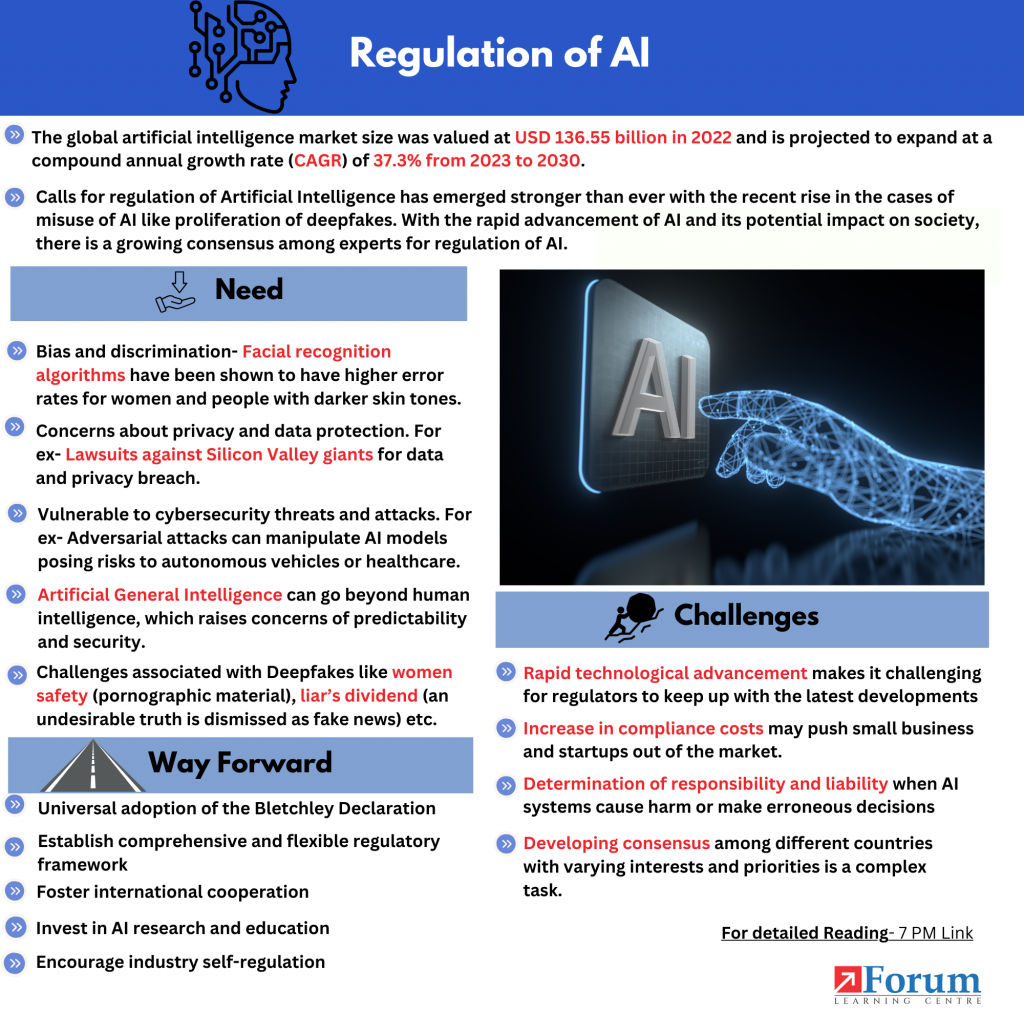

Calls for the regulation of Artificial Intelligence (AI) have grown stronger than ever with the recent rise in cases of AI misuse, such as the proliferation of deepfakes. Given the rapid advancement of AI and its potential impact on society, there is a growing consensus among experts that regulation is necessary to ensure the responsible and ethical use of AI technology.

| Table of Contents |
| --- |
| What is the need for regulation of AI? |
| What are the challenges in regulation of AI? |
| What is the status of regulation of AI in India and across the globe? |
| What should be the way forward? |

What is the need for regulation of AI?

1. Bias and discrimination: AI systems can inherit biases from the data they are trained on, leading to discriminatory outcomes. For ex- Facial recognition algorithms have been shown to have higher error rates for women and people with darker skin tones.

2. Lack of transparency- Many AI algorithms operate as black boxes, making it difficult to understand how they reach their decisions. For ex- A medical AI system may recommend a specific treatment but be unable to explain its reasoning.

3. Privacy and data protection- AI systems rely on vast amounts of personal data, raising concerns about privacy and data protection. For ex- Lawsuits against Silicon Valley giants over data and privacy breaches in their AI systems.

4. Security risks- AI systems can be vulnerable to cybersecurity threats and attacks. For ex- Adversarial attacks can manipulate AI models, posing risks in critical domains such as autonomous vehicles or healthcare.

5. Ethical considerations- AI raises ethical questions related to its impact on jobs, social inequality, and the concentration of power. For ex- Automated decision-making in hiring processes has been shown to perpetuate existing biases and result in unfair outcomes.

6. Artificial General Intelligence- AGI, a hypothetical form of AI that could self-learn and surpass human intelligence, raises concerns about predictability and security.

7. Autonomous Weapons Development- These machines have the potential to make life-and-death decisions without direct human intervention, raising ethical dilemmas regarding the value of human life.

8. Mass State Surveillance: AI equipped to conduct facial recognition and analyze extensive data could empower governments to maintain round-the-clock profiles of citizens. This would make it difficult to dissent against governments.

9. Challenges associated with Deepfakes generated using AI- There are concerns about women’s safety (morphed pornographic material), the liar’s dividend (an undesirable truth being dismissed as fake news) and the fuelling of radicalisation and violence (fake videos showing armed forces committing ‘crimes in conflict areas’).

| Read More- Deepfakes- Explained Pointwise |
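Point 1 above (bias and discrimination) can be made concrete with a small check. The sketch below is only illustrative: it assumes an audit log of `(group, predicted, actual)` records, and the function name `error_rate_by_group` and the sample data are hypothetical, made up for this example rather than taken from any real system. It computes the error rate for each group and the gap between the best-served and worst-served groups, which is one simple signal a regulator or auditor might look at.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return {group: share of records where the prediction was wrong}."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical, made-up audit records: (group, predicted, actual).
sample_records = [
    ("group_a", True, True),
    ("group_a", False, False),
    ("group_a", True, True),
    ("group_a", False, True),   # error
    ("group_b", True, False),   # error
    ("group_b", False, True),   # error
    ("group_b", True, True),
    ("group_b", False, False),
]

rates = error_rate_by_group(sample_records)

# Gap between the worst-served and best-served groups.
gap = max(rates.values()) - min(rates.values())

print(rates)
print(gap)
```

A large gap does not by itself prove discrimination, but it flags where the training data or the model may need closer review.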

What are the challenges in regulation of AI?

1. Rapid technological advancement- AI is evolving rapidly, making it difficult for regulators to keep up with the latest developments and regulate the technology effectively.

2. Complexity and development- Creating effective regulations that address the intricacies of AI systems and keep pace with technological advancements is a considerable challenge.

3. Increased costs and competition- Compliance with regulations may impose additional costs on businesses. This disproportionately affects smaller companies and startups, limiting their ability to compete in the AI market.

4. Accountability and liability- Determining who is responsible and liable when AI systems cause harm or make erroneous decisions is also a considerable challenge.

5. International cooperation- Developing consensus among different countries with varying interests and priorities is a complex task.

What is the status of regulation of AI in India and across the globe?

India

a. Digital India Framework- India is developing a comprehensive Digital India Framework that will include provisions for regulating AI. The framework aims to protect digital citizens and ensure the safe and trusted use of AI.

b. National AI programme- India has established a National AI Programme to promote the efficient and responsible use of AI.

c. National Data Governance Framework Policy- India has implemented a National Data Governance Framework Policy to govern the collection, storage, and usage of data, including data used in AI systems. This policy will help ensure the ethical and responsible handling of data in the AI ecosystem.

d. Draft Digital India Act- The Ministry of Electronics and Information Technology (MeitY) is working on framing the draft Digital India Act, which will replace the existing IT Act. The new act will have a specific chapter dedicated to emerging technologies, particularly AI, and how to regulate them to protect users from harm.

Rest of the World

1. European Union- The European Union is working on the draft Artificial Intelligence Act (AI Act) to regulate AI from the top down.

2. United States- The White House Office of Science and Technology Policy has published the non-binding Blueprint for an AI Bill of Rights, which lists principles for the design, use, and deployment of automated systems to minimize potential harm from AI.

3. Japan- Japan’s approach to regulating AI is guided by the Society 5.0 project, aiming to address social problems with innovation.

4. China- China has established the “Next Generation Artificial Intelligence Development Plan” and published ethical guidelines for AI. It has also introduced specific laws related to AI applications, such as the management of algorithmic recommendations.

What should be the way forward?

1. Universal adoption of the Bletchley Declaration- A push must be made for all countries to adopt the Bletchley Declaration.

| Read More- Bletchley Declaration |

2. Establish a comprehensive and flexible regulatory framework- Governments should develop clear guidelines and laws that address various aspects of AI, including data privacy, algorithmic transparency, accountability, and potential biases.

3. Foster international cooperation- Given the global nature of AI and its potential impact, collaboration among countries is essential. International standards and agreements should be developed to promote ethical practices and ensure consistency in regulation across borders. In this respect, the G7 Hiroshima AI Process (HAP) could facilitate discussions.

4. Encourage industry self-regulation- Companies involved in AI development should take responsibility for ensuring the ethical and responsible use of their technologies.

5. Invest in AI research and education- Governments, academic institutions, and industry stakeholders should allocate resources to R&D and education in the field of AI. This will help create a well-informed workforce capable of addressing regulatory challenges and ensuring the safe and responsible deployment of AI technologies.

| Read More- Indian Express |

| UPSC Syllabus- GS 3: Science and Technology – developments and their applications and effects in everyday life |